Last weekend I was at the tenth Pentaho Community Meeting (PCM) in Mainz. It is always a meeting with lots of fun, but also lots of interesting talks and discussions. One of the talks during the last PCM was by Caio Moreno de Souza about auto machine learning (or autoML). Put very simply: with machine learning, you give the computer data and it creates and validates a model, so you can predict the ‘future’.

His presentation and the discussions about it during PCM17 got my brain spinning at high speed. (As you might know, my background is a nice combination of statistics (human sciences) and programming, but I am rather new when it comes to machine learning.)

The data versus business gap

At this moment I think autoML in the sense described above, where you only hand over the data, is not going to work. I think we need some information to determine which algorithm(s) to use and which parameters to feed them. But I think autoML, or maybe we should call it easyML, is needed to fill a gap:

On one side, we have the data guys: they are very good at manipulating data. They should actually have a very basic understanding of statistics (at least of measurement levels), but they often lack or ignore this background.

On the other side we have the business guys: they have ‘domain information’, they know a lot about the subject, and preferably they have some understanding of the data, especially of how it is linked to the subject. They, too, have at most a basic understanding of statistics.

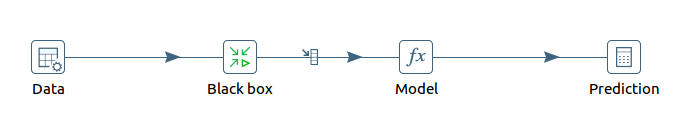

In between you have the machine learning tools. Even if they are easy to use, like the black box above, which is able to select the ‘best’ algorithm (hmm, Auto-WEKA seems to implement this black box), we still have a gap.

The machine learning gap

With a little bit of training/documentation you might be able to let the data guys perform the analysis and, to some extent, interpret the results. And the business guys should be able to face validate the resulting model. But neither of them knows which algorithms to choose. You need some statistical/methodological understanding to choose the proper algorithms, because you cannot use every algorithm for every problem: trend analysis needs other algorithms than classification analysis. Maybe more important, for some classification problems the two kinds of error are not equally bad. Take recurrence of breast cancer: you would rather not miss a recurrence, while classifying a non-recurrence as a recurrence is not as bad. In that case the recall on the recurrence event should be high.
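To make the recall point concrete, here is a minimal sketch in plain Python. The labels and the two "models" (`cautious` and `optimistic`) are made up purely for illustration; they are not real patient data or real model output. Both sets of predictions have the same accuracy, but only one of them catches every recurrence.

```python
def accuracy(y_true, y_pred):
    """Share of all cases that were classified correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive):
    """Share of the actual `positive` cases that were also predicted as `positive`."""
    found = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual = sum(t == positive for t in y_true)
    return found / actual if actual else 0.0


# Hypothetical example: 10 patients, 3 of them with a recurrence.
y_true = ["recurrence"] * 3 + ["no-recurrence"] * 7

# Flags two patients too many, but misses no recurrence.
cautious = ["recurrence"] * 5 + ["no-recurrence"] * 5

# Raises no false alarm, but misses two of the three recurrences.
optimistic = ["recurrence"] * 1 + ["no-recurrence"] * 9

for name, y_pred in [("cautious", cautious), ("optimistic", optimistic)]:
    print(name, accuracy(y_true, y_pred), recall(y_true, y_pred, "recurrence"))
```

If you only look at accuracy, the two look equally good. Looking at recall on the recurrence event shows the difference that matters here.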

The solution

I think it is not desirable or needed to train one of these sides to pick the appropriate algorithms and select the correct parameters. But I think we should be able to create more awareness of the different kinds of machine learning problems and of the outcome you wish to optimize, so you can give the black box the information it needs to create models that are methodologically valid and interesting for the business. Of course, the black box should then be able to use this information in the model selection. Maybe this is possible with Auto-WEKA, but that is something I still need to investigate.
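As a rough sketch of what "providing information to the black box" could look like: the business side states which outcome to optimize, and the selection step uses that to choose between candidate models. Everything here is hypothetical (the `brief` dictionary, `pick_model`, the candidate names and their predictions). It is not how Auto-WEKA or any other tool works, just an illustration of the idea.

```python
def accuracy(y_true, y_pred, positive=None):
    """Share of all cases classified correctly (`positive` is unused here)."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive):
    """Share of the actual `positive` cases that were predicted as `positive`."""
    found = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual = sum(t == positive for t in y_true)
    return found / actual if actual else 0.0


METRICS = {"accuracy": accuracy, "recall": recall}


def pick_model(brief, y_true, candidates):
    """Return the name of the candidate that scores best on the metric in the brief."""
    score = METRICS[brief["optimize"]]
    return max(candidates, key=lambda name: score(y_true, candidates[name], brief["positive"]))


# Made-up validation labels and made-up predictions of two candidate models.
y_true = ["rec", "rec", "rec", "no", "no", "no", "no", "no", "no", "no"]
candidates = {
    "model_a": ["rec", "no", "no", "no", "no", "no", "no", "no", "no", "no"],
    "model_b": ["rec", "rec", "rec", "rec", "rec", "rec", "no", "no", "no", "no"],
}

# The same data and candidates, but a different brief gives a different winner.
print(pick_model({"optimize": "accuracy", "positive": "rec"}, y_true, candidates))
print(pick_model({"optimize": "recall", "positive": "rec"}, y_true, candidates))
```

The point of the sketch is that the brief is something a business person can fill in without knowing any algorithm, while the black box does the rest.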

I’m looking forward to helping close the machine learning gap and, with that, the gap between the business guys and the data guys.